Overview

Gfarm (pronounced "G-farm") is a wide-area distributed file system that has been under continuous research and development since around 2000. It bundles the local storage of multiple PCs and PC clusters distributed across wide areas so that they function as a single large-scale, high-performance shared file system.

Users can access data through a single virtual directory hierarchy without being aware of where the data is actually stored. This mechanism meets the needs of fields such as physics, astronomy, and life sciences, which must efficiently process and share extremely large datasets ranging from terabytes to petabytes.

Gfarm is developed through ongoing research and its source code is open, which supports transparency and security. The NPO OSS Tsukuba Technical Support Center provides technical support and advanced maintenance and construction support, and shares technical information, for OSS originating in Japan, centered on the Gfarm File System.

Gfarm System Configuration (3 Types of Hosts)

The Gfarm File System is primarily composed of the following three types of hosts (computers), though a single host can serve multiple roles if the number of available hosts is limited.

- Client

- Hosts that use the Gfarm File System.

- File system node

- A group of hosts that provide actual data storage space for the Gfarm File System. The system is designed to support configurations with thousands or tens of thousands of nodes distributed across wide areas, with an I/O daemon called gfsd running on each node. These nodes typically also serve as clients using Gfarm.

- Metadata server

- A host that manages metadata for the Gfarm File System (information such as which files are stored where). It runs a metadata server daemon called gfmd and a backend database such as PostgreSQL.
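
To make the division of roles concrete, the following is a minimal Python sketch, not Gfarm code. The classes `MetadataServer`, `FileSystemNode`, and `Client`, along with the node names used with them, are hypothetical stand-ins for gfmd, a host running gfsd, and a client host. The real daemons communicate over the network and keep metadata in a backend database; here everything lives in memory.

```python
from dataclasses import dataclass, field


@dataclass
class MetadataServer:
    """Toy stand-in for gfmd: records which nodes hold a replica of each path."""
    replicas: dict[str, set[str]] = field(default_factory=dict)

    def register(self, path: str, node: str) -> None:
        self.replicas.setdefault(path, set()).add(node)

    def locate(self, path: str) -> set[str]:
        return set(self.replicas.get(path, set()))


@dataclass
class FileSystemNode:
    """Toy stand-in for a host running gfsd: stores the actual file contents."""
    name: str
    files: dict[str, bytes] = field(default_factory=dict)


class Client:
    """Toy client: asks the metadata server where a file is, then reads it from a node."""

    def __init__(self, metadata: MetadataServer, nodes: list[FileSystemNode]):
        self.metadata = metadata
        self.nodes = {node.name: node for node in nodes}

    def write(self, path: str, data: bytes, node_names: list[str]) -> None:
        # Store one replica on each requested node and record its location.
        for name in node_names:
            self.nodes[name].files[path] = data
            self.metadata.register(path, name)

    def read(self, path: str, unavailable: frozenset[str] = frozenset()) -> bytes:
        # Try replica holders in a fixed order, skipping nodes that are down.
        for name in sorted(self.metadata.locate(path)):
            if name not in unavailable:
                return self.nodes[name].files[path]
        raise FileNotFoundError(path)
```

With two nodes `node-a` and `node-b` and a file written to both, `client.read(path, unavailable={"node-a"})` still returns the data from `node-b`. The caller only ever names the path, never the node, which is the location transparency described above.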

Features & Benefits

The Gfarm File System is like "a massive, fault-tolerant data repository accessible from anywhere." It incorporates unique innovations to meet the needs of a diverse range of users, including researchers handling large-scale data and IT administrators who prioritize stable system operation.

1. Peace of Mind That Data Won’t Be Lost

Important data is replicated in multiple locations, making it resilient to disasters.

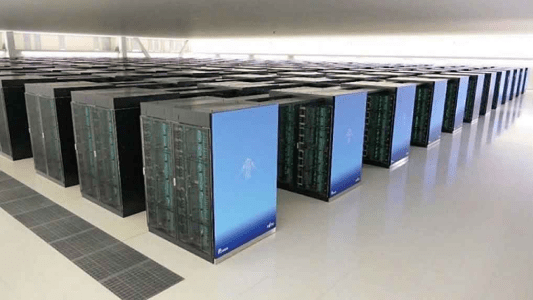

Data is not stored in just one location but is replicated in multiple remote locations. For example, in the “HPCI Shared Storage” for supercomputers, 100 petabytes (an extremely large amount of data) of files are replicated and stored in two locations: Kashiwa in Chiba Prefecture and Kobe in Hyogo Prefecture. This ensures that even if a problem occurs at one site, data can continue to be accessed from the other, preventing system downtime.

2. Unlimited Expansion Potential

The system can be expanded without downtime even as data volume increases.

Storage can be increased or decreased without stopping the system. This allows data storage capacity and processing power to be expanded or reduced as needed. Additionally, the number of replicas and replica placement locations can be freely configured according to data importance and access frequency, making it possible to avoid concentrated data access.
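
As an illustration of configurable replica counts and placement, here is a small Python sketch. It is not Gfarm's placement logic, which this page does not describe. The function name `place_replicas`, the site names, and the "one node per site in turn" policy are all assumptions chosen for the example.

```python
from itertools import zip_longest


def place_replicas(nodes_by_site: dict[str, list[str]], replica_count: int) -> list[str]:
    """Pick nodes for replicas, spreading them across sites before reusing a site."""
    total = sum(len(nodes) for nodes in nodes_by_site.values())
    if not 0 <= replica_count <= total:
        raise ValueError(f"replica_count must be between 0 and {total}")

    # Visit sites in a stable order: first node of every site, then second, and so on.
    site_lists = [nodes_by_site[site] for site in sorted(nodes_by_site)]
    interleaved = [
        node
        for round_ in zip_longest(*site_lists)
        for node in round_
        if node is not None
    ]
    return interleaved[:replica_count]
```

Under this policy, a directory holding important data could be given a higher `replica_count` than a scratch directory, and the first two replicas of any file would always land at different sites whenever at least two sites have nodes.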

3. Secure Access from Anywhere

Data can be used from anywhere in the world as if it were on your own computer.

Unlike ordinary file systems, Gfarm enables secure file access over the Internet, even from remote locations; this is its greatest feature. As with cloud storage, you can access your files from anywhere.

4. Guarantee That Data Won’t Be Corrupted

Invisible data corruption (silent data corruption) can be automatically detected and prevented.

Gfarm guarantees data integrity, meaning that stored data is not corrupted. It detects and guards against “silent data corruption,” where data becomes corrupted without any error message. Users are prevented from unintentionally accessing corrupted data, and the system responds to such faults automatically.
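
The general technique behind this kind of protection can be sketched in Python as follows. This is a generic checksum-verification example and not Gfarm's implementation: the use of SHA-256, the function names, and the replica names are assumptions made for illustration.

```python
import hashlib


def digest(data: bytes) -> str:
    """Checksum recorded when the file is written (SHA-256 is an assumption here)."""
    return hashlib.sha256(data).hexdigest()


def read_verified(replicas: dict[str, bytes], expected: str) -> tuple[bytes, list[str]]:
    """Return data from the first intact replica and the names of corrupted replicas."""
    good = None
    corrupted = []
    for name, data in replicas.items():
        if digest(data) == expected:
            if good is None:
                good = data
        else:
            corrupted.append(name)
    if good is None:
        raise IOError("no intact replica available")
    return good, corrupted


def repair(replicas: dict[str, bytes], expected: str) -> list[str]:
    """Overwrite corrupted replicas with an intact copy; return the repaired names."""
    good, corrupted = read_verified(replicas, expected)
    for name in corrupted:
        replicas[name] = good
    return corrupted
```

Because the reader compares each replica against the checksum recorded at write time, a replica that has silently changed on disk is never handed to the user, and an intact copy elsewhere can be used to restore it.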

Differences from Other Solutions

- As a file system developed in Japan with open-source code, it provides peace of mind. It has an operational track record of over 10 years in HPCI Shared Storage and JLDG, and necessary features are continuously being developed, so it can accommodate a wide range of requirements. It addresses silent data corruption and has automatically detected and repaired numerous instances of corrupted data to date.

Use Cases

Gfarm is used for data utilization on the supercomputer "Fugaku" and in cutting-edge research fields such as particle physics and astronomy.

- HPCI Shared Storage

- The lifeline of research infrastructure! A highly reliable data sharing platform achieved through geographic distribution & redundancy

- JLDG (Japan Lattice Data Grid)

- Supporting the forefront of physics! An international data grid realized with Gfarm

- Subaru Telescope Data Analysis

- Initiatives that significantly improved processing speed by leveraging Gfarm & Pwrake

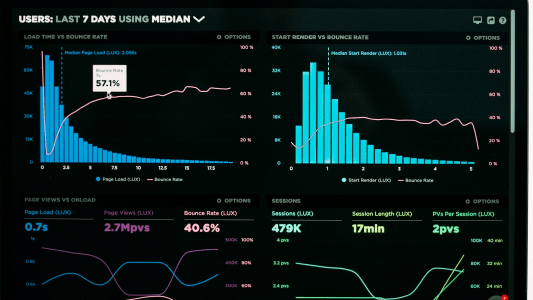

- NICT Science Cloud

- Enables real-time processing, high-speed data visualization, & instant analysis of big data

Technology & Development

Gfarm enhances convenience through secure OAuth/OIDC authentication, the "Gfptar" batch transfer function for small files, and Nextcloud support.

- OAuth/OIDC Authentication

- This mechanism enables more secure access to the Gfarm file system using a new login method.

- Gfptar

- Transfers large numbers of input entries (files) in parallel while automatically consolidating them into multiple archive files within the output directory.

- Nextcloud Support

- By accessing shared storage directly, users can more easily utilize data on Gfarm.

- Gfarm HTTP Gateway

- A mechanism enabling access through the HTTP protocol, widely used in web applications.
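
The idea behind Gfptar, consolidating many small files into a handful of archives that are written in parallel, can be sketched in Python as follows. This is not Gfptar's code or its archive layout: the function names, the round-robin batching, and the `0000.tar` naming scheme are assumptions for illustration, and the sketch works on local directories only.

```python
import tarfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def split_into_batches(files: list[Path], batch_count: int) -> list[list[Path]]:
    """Distribute files round-robin over at most batch_count non-empty batches."""
    batches: list[list[Path]] = [[] for _ in range(batch_count)]
    for index, path in enumerate(sorted(files)):
        batches[index % batch_count].append(path)
    return [batch for batch in batches if batch]


def write_archive(archive_path: Path, members: list[Path], base: Path) -> Path:
    """Write one tar archive containing the given files, stored relative to base."""
    with tarfile.open(archive_path, "w") as tar:
        for member in members:
            tar.add(member, arcname=member.relative_to(base).as_posix())
    return archive_path


def archive_in_parallel(src_dir: Path, out_dir: Path, batch_count: int = 4) -> list[Path]:
    """Consolidate every file under src_dir into several archives inside out_dir."""
    if batch_count < 1:
        raise ValueError("batch_count must be at least 1")
    files = [p for p in src_dir.rglob("*") if p.is_file()]
    out_dir.mkdir(parents=True, exist_ok=True)
    batches = split_into_batches(files, batch_count)
    # Each worker thread writes one archive, so archives are produced concurrently.
    with ThreadPoolExecutor(max_workers=batch_count) as pool:
        futures = [
            pool.submit(write_archive, out_dir / f"{i:04d}.tar", batch, src_dir)
            for i, batch in enumerate(batches)
        ]
        return [future.result() for future in futures]
```

Transferring a few large archives instead of a very large number of small files reduces per-file overhead, which is the motivation for this kind of batching. The output directory should sit outside the source directory so that archives are not picked up as input.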

Installation & Configuration

To deploy Gfarm and build a large-scale research data infrastructure, you need to configure metadata servers, file system nodes, and other components. As described above, the Gfarm File System consists of groups of hosts: clients, file system nodes, and metadata servers. Details are provided in the "Installation Manual" and "Setup Manual", which are available from GitHub repositories and other resources of the communities associated with the NPO OSS Tsukuba Technical Support Center.

Basic Configuration Flow

Installation and configuration work is performed using Gfarm management commands and configuration files.

- 1. Initial Metadata Server Configuration

- Decide on an administrator username, then configure the metadata server by running the config-gfarm command with root privileges.

- This configuration sets up and starts the backend database (e.g., PostgreSQL), creates the configuration files (/etc/gfarm2.conf and /etc/gfmd.conf), and starts the metadata server gfmd.

- 2. Authentication Method Configuration

- For example, when adopting shared key authentication, create a _gfarmfs user for authentication with file system nodes & generate an authentication key (e.g., using the gfkey -f command).

- 3. Automatic Startup Configuration

- Configure the metadata server (gfmd) and the backend database (e.g., gfarm-pgsql) to start automatically.

- 4. Client Configuration & Operation Verification

- Install the gfarm-client package that contains Gfarm management commands.

- Use management commands such as gfls (display directory contents), gfuser (user management), and gfgroup (group management) to verify configuration and operation.
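
Before working through the steps above, it can help to check what is already present on a host. The following Python sketch only tests whether the commands and configuration files named in this section exist; it does not run any Gfarm command. The function name `preflight` is hypothetical, and the assumption that every listed item is relevant to a given host is a simplification, since a host that acts only as a client may legitimately lack some of them.

```python
import shutil
from pathlib import Path

# Command and file names are taken from the configuration flow above.
COMMANDS = ("config-gfarm", "gfkey", "gfls", "gfuser", "gfgroup")
CONFIG_FILES = ("/etc/gfarm2.conf", "/etc/gfmd.conf")


def preflight(commands=COMMANDS, config_files=CONFIG_FILES) -> dict[str, dict[str, bool]]:
    """Report which commands are on PATH and which configuration files exist."""
    return {
        "commands": {name: shutil.which(name) is not None for name in commands},
        "config_files": {path: Path(path).is_file() for path in config_files},
    }


if __name__ == "__main__":
    report = preflight()
    for section, results in report.items():
        for name, present in results.items():
            print(f"{section:13s} {name:20s} {'found' if present else 'missing'}")
```

A "missing" entry is only a hint about which step has not been completed on that host. The manuals mentioned above remain the reference for what each host role actually requires.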

Source Code

The source code for each component is listed below. The source code links lead to GitHub.

- Gfarm File System

- Gfarm File System server & client

- Gfarm2fs

- Program for mounting the Gfarm File System

- Nextcloud-Gfarm

- Nextcloud container supporting Gfarm external storage

- Gfarm-hadoop

- Plugin for using Gfarm with Hadoop

- Gfarm-samba

- Samba plugin for using Gfarm from Windows clients

- Gfarm-mpiio

- Plugin for MPICH & MVAPICH to use Gfarm with MPI-IO

- Gfarm-gridftp-dsi

- Plugin for GridFTP server

- Gfarm-zabbix

- Zabbix plugin for Gfarm fault monitoring

Documentation

Support

The NPO OSS Tsukuba Technical Support Center was established to provide technical support for open source software (OSS) originating in Japan, centered on the Gfarm File System. It offers advanced maintenance and construction support and shares technical information. For details, please see "Join & Support".

Frequently Asked Questions

General

- When is Gfarm useful?

- It allows you to safely store important data. It meets various requirements such as sharing large amounts of data among multiple users.

- Can individuals use it?

- Is security adequate?

- How much can data capacity be increased?

- Will data become corrupted?

- Is support available?

- What does open source mean?

- What is Gfarm’s license?

- What is Gfarm?

- Where can I download Gfarm?

- Is there a mailing list?

Technical Information

- How much memory does gfmd consume?

- How much disk space does the machine running gfmd consume?

- How many descriptors should gfmd use?

Security

- Can Gfarm be operated safely in an environment not protected by a firewall?

- What are the differences between authentication methods?

Troubleshooting

- Cannot connect to file system nodes or metadata server.

- Authentication errors occur during file access or file replica creation, or "no filesystem node" errors occur.

- A file system node’s disk has crashed. What should I do?

- File modification times seem incorrect.

- How can I collect core files when gfmd or gfsd terminates abnormally?

- I have InfiniBand but RDMA doesn’t work.